I want to connect to resources on AWS from my home with the least operational overhead, which led me to deploy an AWS Site-to-Site VPN for connecting my home network to a VPC.

The Environment

Some resources I want to access are;

- G4dn.xlarge EC2 instances used for streaming games

- t2.micro EC2 instances hosting Home Assistant

- RDS (PostgreSQL) instances for hosting OpenStreetMap data

Home Environment

When setting up a connection from AWS to my home, I have to consider the following specifications;

- I live in West London, relatively close to the eu-west-2 data center

  - I have a VPC in eu-west-2 running on the 10.1.0.0/16 network

- I use a publicly-offered ISP for accessing the internet

- There are two hops (routers) between the public internet and my home network

  - The first hop is the router provided by the ISP to connect to the internet

    - This network lives on the 192.168.0.0/24 subnet

  - The second hop is my own off-the-shelf router from ASUS running OpenWRT

    - My home network lives on the 10.0.0.0/24 subnet

    - The router has 8MB of usable storage for packages and configuration

Setting up AWS Site-to-Site

AWS Site-to-Site is one of Amazon’s offerings for bridging an external network to a VPC over the public internet. Some alternatives are;

- AWS Client VPN (based on OpenVPN)

- More expensive

- More complex, often tends to be slower without optimization

- Self-managed VPN

- Allows use of any VPN technology, such as Wireguard

- Allows custom metric monitoring

- Requires management of VPC topologies and firewalls

- Can be more expensive

I chose Site-to-Site on this occasion so I could learn how IPSec works in more detail, and I saw deploying it to OpenWRT as a challenge. It’s also a lot cheaper than a firewall license, EC2 rental and public IP charges.

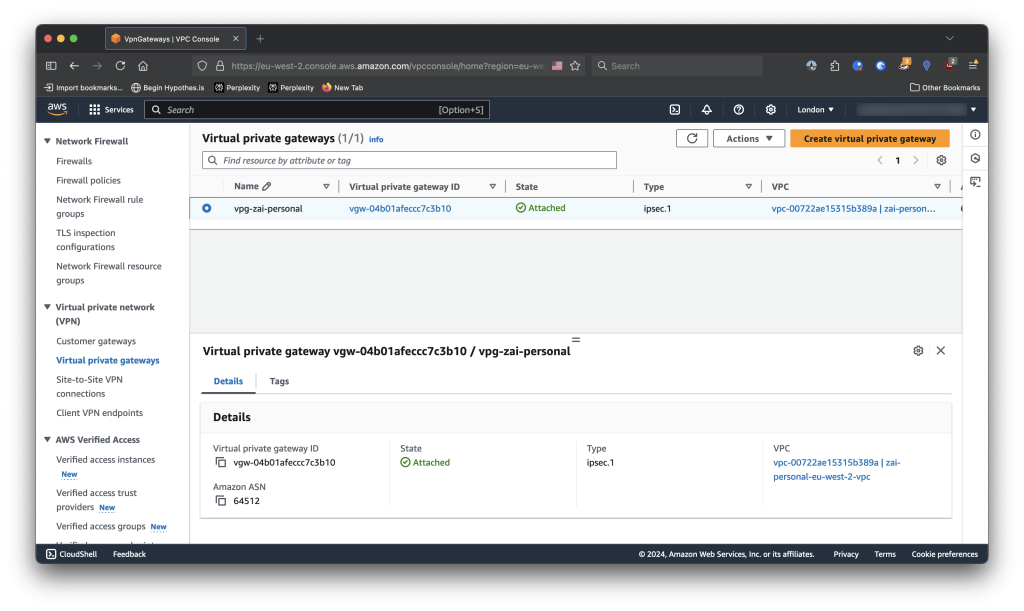

Deploying a Virtual Private Gateway

A Virtual Private Gateway is the AWS-side endpoint of an IPSec tunnel. It also hosts the configuration of the local BGP instance, and is what drives the propagation of routes between the IPSec tunnels and the VPC routing tables.

resource "aws_vpn_gateway" "main" {

vpc_id = data.aws_vpc.main.id

}

There’s not much to configure with the VPG, so I left it with its defaults.

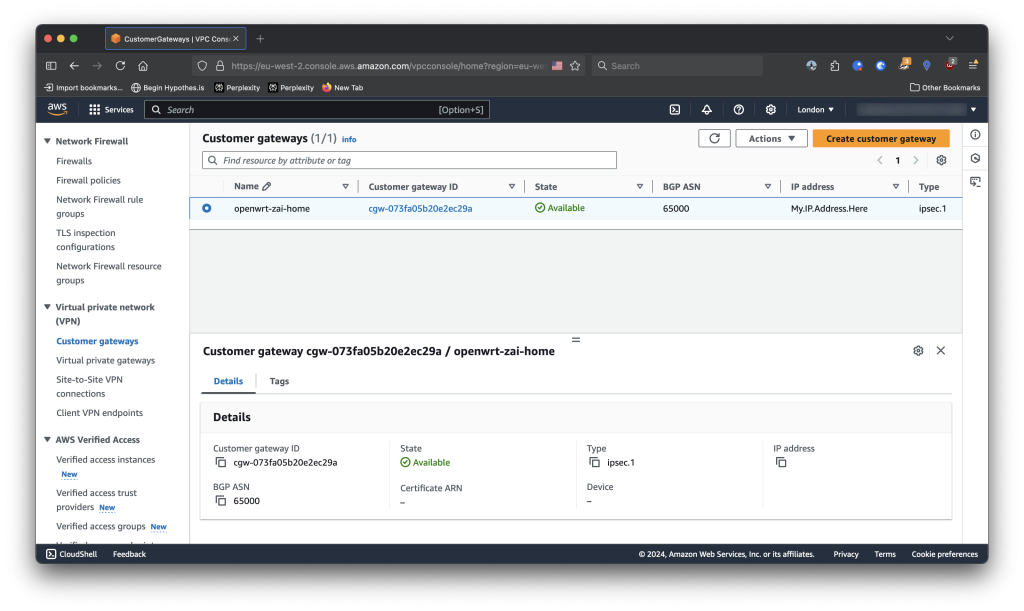

Deploying a Customer Gateway

A customer gateway represents the local end of the IPSec tunnel and the BGP daemon running on it. In my case, this is the OpenWRT router.

resource "aws_customer_gateway" "openwrt" {

bgp_asn = 65000

ip_address = "<WAN Address of OpenWRT>"

type = "ipsec.1"

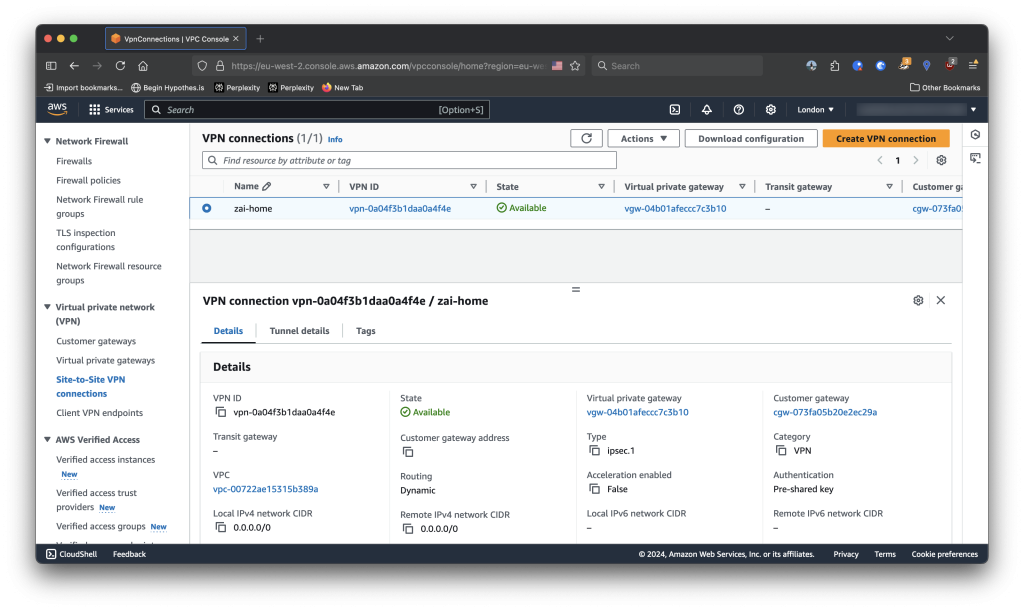

}Deploying a Site-to-Site VPN Connection

The VPN Connection itself is what connects a VPG (AWS Endpoint) to a customer gateway (Local endpoint) in the form of an IPSec VPN connection.

resource "aws_vpn_connection" "main" {

customer_gateway_id = aws_customer_gateway.openwrt.id

vpn_gateway_id = aws_vpn_gateway.main.id

type = aws_customer_gateway.openwrt.type

tunnel1_ike_versions = ["ikev1", "ikev2"]

tunnel1_phase1_dh_group_numbers = [2, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24]

tunnel1_phase1_encryption_algorithms = ["AES128", "AES128-GCM-16", "AES256", "AES256-GCM-16"]

tunnel1_phase1_integrity_algorithms = ["SHA1", "SHA2-256", "SHA2-384", "SHA2-512"]

tunnel1_phase2_dh_group_numbers = [2, 5, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24]

tunnel1_phase2_encryption_algorithms = ["AES128", "AES128-GCM-16", "AES256", "AES256-GCM-16"]

tunnel1_phase2_integrity_algorithms = ["SHA1", "SHA2-256", "SHA2-384", "SHA2-512"]

}

These (tunnel1_*) are the default values set by AWS and should be locked down. For the purpose of testing, I left them all at their defaults. These settings are directly tied to the IPSec encryption settings described below.

Connecting OpenWRT via IPSec

Ansible Role Variables

I’ve designed my Ansible role to be able to configure AWS IPSec tunnels with the bare minimum configuration. All information that the role requires is provided by Terraform upon provisioning of the AWS Site-to-Site configuration.

bgp_remote_as: "64512"

ipsec_tunnels:

- ifid: "301"

name: xfrm0

inside_addr: <Inside IPv4>

gateway: <Endpoint Tunnel 1>

psk: <PSK Tunnel 1>

- ifid: "302"

name: xfrm1

inside_addr: <Inside IPv4>

gateway: <Endpoint Tunnel 2>

psk: <PSK Tunnel 2>

- bgp_remote_as refers to the ASN of the Virtual Private Gateway, and is strictly used by the BGP daemon offered by Quagga.

  - BGP is used to propagate routes to and from AWS.

  - When a Site-to-Site VPN is configured to use dynamic routing, AWS will report the tunnel as Down if it cannot reach the BGP instance.

- ipsec_tunnels is used by XFRM and strongSwan to;

  - Build one XFRM interface per tunnel

  - Build one alias interface bound to each XFRM interface for static routing

  - Configure the static routing of the XFRM interfaces

  - Configure the BGP daemon neighbours

  - Configure one IPSec endpoint per tunnel

  - Configure one IPSec tunnel for each XFRM interface
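To make that fan-out concrete, here is a minimal Python sketch of how a single ipsec_tunnels entry maps onto the section names used by the tasks later in this post. The function name and the dictionary keys are hypothetical labels of mine; only the naming pattern (name, name_s, name_ep) comes from the role itself.

```python
def uci_sections(tunnel: dict) -> dict:
    """Return the UCI section names derived from one ipsec_tunnels entry."""
    name = tunnel["name"]
    return {
        "network_xfrm_interface": name,         # proto xfrm, bound by ifid
        "network_static_alias": f"{name}_s",    # holds the inside IPv4 address
        "ipsec_remote": f"{name}_ep",           # IKE endpoint (Phase 1)
        "ipsec_tunnel": name,                   # child SA (Phase 2)
    }


sections = uci_sections({"name": "xfrm0", "ifid": "301"})
for kind, section_name in sections.items():
    print(f"{kind}: {section_name}")
```

Keeping every derived name anchored to the interface name is what makes it easy to see which endpoint, tunnel and alias belong together.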

Required packages

I used three components for this workflow to function, and a last one for debugging security association errors.

- strongswan-full

  - strongSwan provides an IPSec implementation for OpenWRT with full support for UCI. The -full variation of the package is overkill, but you never know!

- quagga-bgpd

  - A BGP implementation light enough to run on OpenWRT. quagga comes in as a dependency.

- luci-proto-xfrm

  - A virtual interface for use by IPSec, where a tunnel requires a vif to bind to.

- ip-full

  - Provides an xfrm argument for debugging IPSec connections with.

name: install required packages

opkg:

name: "{{ item }}"

loop:

- strongswan-full

- quagga-bgpd

- luci-proto-xfrm

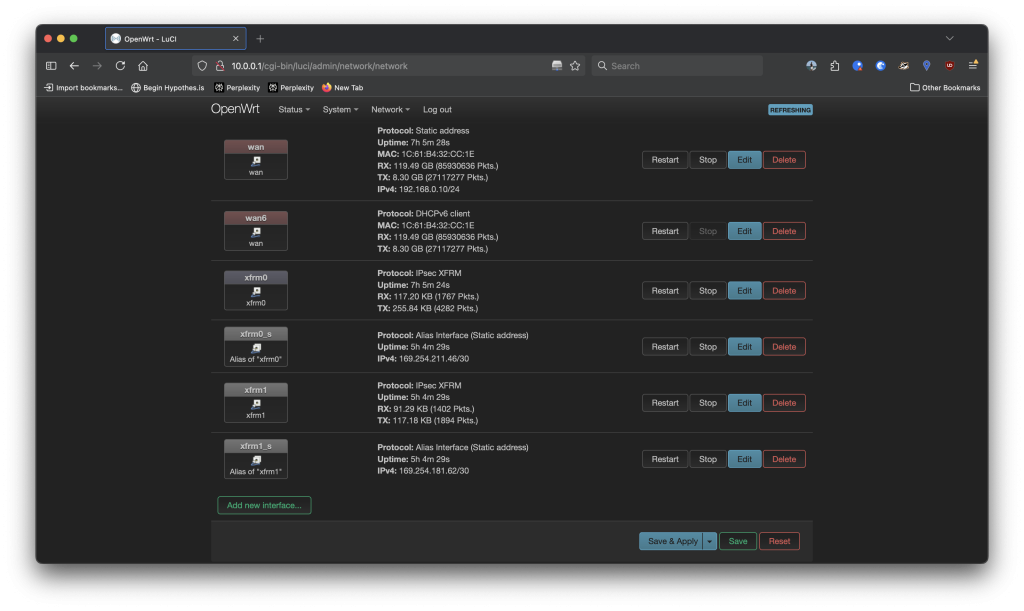

- ip-fullAdding the XFRM Interface

I create one XFRM interface per VPN tunnel provided by AWS, each with an IPv4 address that matches the Inside IPv4 CIDRs defined within the AWS Site-to-Site configuration. The IPv4 address is applied to an alias of the adapter rather than the adapter itself, as the XFRM interface doesn’t support static IP addressing via UCI.

Ansible Task

name: configure xfrm adapter

uci:

command: section

key: network

type: interface

name: "{{ item.name }}"

value:

tunlink: 'wan'

mtu: '1300'

proto: xfrm

ifid: "{{ item.ifid }}"

loop: "{{ ipsec_tunnels }}"

/etc/config/network – UCI Configuration

config interface 'xfrm0'

option ifid '301'

option tunlink 'wan'

option mtu '1300'

option proto 'xfrm'

config interface 'xfrm1'

option tunlink 'wan'

option mtu '1300'

option proto 'xfrm'

option ifid '302'

I use the uci task to deploy adapter configurations. I create one interface per tunnel provided by AWS.

- tunlink sets the IPSec tunnel to connect to and from the wan interface

- mtu is 1300 by default, I didn’t need to configure this value

- ifid is defined as strongSwan will use this to bind an IPSec tunnel to a network interface. This is separate from the name of the interface.

AWS needs to communicate with the BGP instance running on OpenWRT. The value of Inside IPv4 CIDR instructs AWS which IPs to listen on for their BGP instance, and which IP to connect to for fetching routes. The CIDRs will be restricted to the /30 prefix, which provides the range of 4 IP addresses, 2 of which are usable.

As an example, here is the Inside IPv4 CIDR of 169.254.181.60/30 and what that means.

| IP Index | IP Address | Responsibility |

|---|---|---|

| 0 | 169.254.181.60 | Network address |

| 1 | 169.254.181.61 | IP Address reserved for AWS-side of the IPSec tunnel |

| 2 | 169.254.181.62 | IP Address reserved for the OpenWRT-side of the IPSec tunnel |

| 3 | 169.254.181.63 | Broadcast address |

With this known, we know that;

| On the AWS side of the IPSec tunnel | On the OpenWRT side of the IPSec tunnel |

|---|---|

| AWS has a BGP instance listening on the IP address at the first index (169.254.181.61) | OpenWRT needs to be configured to use the IP address at the second index (169.254.181.62) |

| AWS is expecting a BGP neighbour at the second index (169.254.181.62) | The BGP daemon running on OpenWRT needs to be configured to use the neighbour at the first index (169.254.181.61) |

| AWS knows how to route traffic across the 169.254.181.60 network | OpenWRT needs to know how to route traffic on the 169.254.181.60 network |
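The same breakdown can be reproduced with Python's standard ipaddress module. This is only a sketch to illustrate the /30 layout described above; the function name and the dictionary keys are my own and are not part of the role.

```python
import ipaddress


def inside_cidr_roles(cidr: str) -> dict:
    """Split an AWS Inside IPv4 CIDR (/30) into the four roles from the table."""
    network = ipaddress.ip_network(cidr, strict=True)
    if network.prefixlen != 30:
        raise ValueError(f"expected a /30, got /{network.prefixlen}")
    return {
        "network": str(network.network_address),      # index 0
        "aws": str(network[1]),                       # index 1, AWS side / BGP peer
        "openwrt": str(network[2]),                   # index 2, customer side
        "broadcast": str(network.broadcast_address),  # index 3
    }


roles = inside_cidr_roles("169.254.181.60/30")
for role, address in roles.items():
    print(f"{role}: {address}")
```

Passing strict=True means a CIDR with host bits set (for example 169.254.181.61/30) raises an error instead of being silently normalised.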

Configuring the IP Address on the IPSec tunnel

I create an alias on top of the originating XFRM interface so that I can utilize the static protocol within UCI to configure static routing in a declarative way.

Ansible Task

name: create xfrm alias for static addressing

uci:

command: section

key: network

type: interface

name: "{{ item.name }}_s"

value:

proto: static

ipaddr:

- "{{ item.inside_addr | ipaddr('net') | ipaddr(2) }}"

device: "@{{ item.name }}"

loop: "{{ ipsec_tunnels }}"

/etc/config/network – UCI Configuration

config interface 'xfrm0_s'

option proto 'static'

option device '@xfrm0'

list ipaddr '169.254.211.46/30'

config interface 'xfrm1_s'

option proto 'static'

list ipaddr '169.254.181.62/30'

option device '@xfrm1'

I use ipaddr('net') | ipaddr(2) to simplify my Ansible configuration. For example, when inside_addr is 169.254.181.60/30, these filters take the network address and select the address at index 2, giving the result of 169.254.181.62/30.

This will ensure two things;

- The xfrm interface persistently holds the 169.254.181.62/30 IP Address

- The Linux routing table holds a route of 169.254.181.60/30 via the xfrm interface

This resolves the issue of OpenWRT knowing what IP Address to use and how to route the traffic.

Setting up IPSec

Because I’m using strongSwan, I can also use UCI to configure the IPSec tunnel. With this workflow, IPSec configuration is broken down into three elements;

- Endpoint

- Primarily what’s known as “IKE Phase 1”. This is the “How I will connect to the other end”.

- Tunnel

- Primarily known as “IKE Phase 2”. This is the “How do I pass traffic through to the other end”.

- Encryption

- A set of rules to describe how to handle the cryptography.

IPSec Encryption

What’s defined here drives whether Phase 1 will succeed, and must match the AWS VPN Encryption settings.

Ansible Task

name: define ipsec encryption

uci:

command: section

key: ipsec

type: crypto_proposal

name: "aws"

value:

is_esp: '1'

dh_group: modp1024

encryption_algorithm: aes128

hash_algorithm: sha1

/etc/config/ipsec – UCI Configuration

config crypto_proposal 'aws'

option is_esp '1'

option dh_group 'modp1024'

option encryption_algorithm 'aes128'

option hash_algorithm 'sha1'

In my case, I’m;

- Using AES128 for encryption of the traffic

- Using SHA1 as the integrity algorithm for ensuring packets are correct upon arrival

- Naming the crypto_proposal aws for use by the Endpoint and the Tunnel

AES128 and SHA1 are both accepted by the tunnel options defined on the VPN connection above.
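As a sanity check, the proposal can be compared against the tunnel1_* lists from the Terraform resource. The sketch below is my own illustration, not part of the role: the allowed values are copied from the VPN connection above, the MODP-to-group mapping covers only three well-known groups, and upper-casing the UCI names is an assumption that only holds for simple names such as aes128 and sha1.

```python
# Allowed values copied from the tunnel1_* lists on the VPN connection.
PHASE1 = {
    "encryption": {"AES128", "AES128-GCM-16", "AES256", "AES256-GCM-16"},
    "integrity": {"SHA1", "SHA2-256", "SHA2-384", "SHA2-512"},
    "dh_groups": {2, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24},
}
# Phase 2 accepts the same algorithms, plus DH group 5.
PHASE2 = dict(PHASE1, dh_groups=PHASE1["dh_groups"] | {5})

# strongSwan MODP names and their Diffie-Hellman group numbers.
MODP_TO_DH_GROUP = {"modp1024": 2, "modp1536": 5, "modp2048": 14}


def proposal_matches(proposal: dict, allowed: dict) -> bool:
    """Check a UCI crypto_proposal against one phase's allowed values."""
    group = MODP_TO_DH_GROUP.get(proposal["dh_group"])
    return (
        proposal["encryption_algorithm"].upper() in allowed["encryption"]
        and proposal["hash_algorithm"].upper() in allowed["integrity"]
        and group in allowed["dh_groups"]
    )


aws_proposal = {
    "encryption_algorithm": "aes128",
    "hash_algorithm": "sha1",
    "dh_group": "modp1024",
}
print("phase 1:", proposal_matches(aws_proposal, PHASE1))
print("phase 2:", proposal_matches(aws_proposal, PHASE2))
```

A check like this becomes more useful once the AWS side is locked down to a single algorithm per list, since a mismatch then shows up before the tunnel fails to negotiate.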

Declaring the IPSec Endpoint

Ansible Task

name: configure ipsec remote

uci:

command: section

key: ipsec

type: remote

name: "{{ item.name }}_ep"

value:

enabled: "1"

gateway: "{{ item.gateway }}"

local_gateway: "<Public IP>"

local_ip: "10.0.0.1"

crypto_proposal:

- aws

tunnel:

- "{{ item.name }}"

authentication_method: psk

pre_shared_key: "{{ item.psk }}"

fragmentation: yes

keyingretries: '3'

dpddelay: '30s'

keyexchange: ikev2

loop: "{{ ipsec_tunnels }}"

/etc/config/ipsec – UCI Configuration

config remote 'xfrm0_ep'

option enabled '1'

option gateway '<Tunnel 1 IP>'

option local_gateway '<Public IP>'

option local_ip '10.0.0.1'

list crypto_proposal 'aws'

list tunnel 'xfrm0'

option authentication_method 'psk'

option pre_shared_key '<PSK>'

option fragmentation '1'

option keyingretries '3'

option dpddelay '30s'

option keyexchange 'ikev2'

config remote 'xfrm1_ep'

option enabled '1'

option gateway '<Tunnel 2 IP>'

option local_gateway '<Public IP>'

option local_ip '10.0.0.1'

list crypto_proposal 'aws'

list tunnel 'xfrm1'

option authentication_method 'psk'

option pre_shared_key '<PSK>'

option fragmentation '1'

option keyingretries '3'

option dpddelay '30s'

option keyexchange 'ikev2'

- The gateway is known as the Outside IP Address on AWS

- local_gateway points to the WAN Address of OpenWRT

- local_ip points to the LAN address of OpenWRT

- crypto_proposal points to aws (Defined above)

- tunnel points to the name of the interface that this IPSec endpoint represents.

  - Since there are two IPSec endpoints, two of these remotes are created. I use the interface name (from xfrm) across all duplicates to make it visibly clear what’s being used where.

- pre_shared_key is the PSK that gets generated (or set) within the VPN Tunnel.

  - This is unique per tunnel, meaning that there should be two different PSKs per Site-to-Site VPN connection. They can be found under the Modify VPN Tunnel Options selection.

Configuring the IPSec Tunnel

The tunnel instructs strongSwan how to bind the IPSec tunnel to an interface. The key here is the ifid of the XFRM interfaces defined earlier.

Ansible Task

name: configure ipsec tunnel

uci:

command: section

key: ipsec

type: tunnel

name: "{{ item.name }}"

value:

startaction: start

closeaction: start

crypto_proposal: aws

dpdaction: start

if_id: "{{ item.ifid }}"

local_ip: "10.0.0.1"

local_subnet:

- 0.0.0.0/0

remote_subnet:

- 0.0.0.0/0

loop: "{{ ipsec_tunnels }}"

/etc/config/ipsec – UCI Configuration

config tunnel 'xfrm0'

option startaction 'start'

option closeaction 'start'

option crypto_proposal 'aws'

option dpdaction 'start'

option if_id '301'

option local_ip '10.0.0.1'

list local_subnet '0.0.0.0/0'

list remote_subnet '0.0.0.0/0'

config tunnel 'xfrm1'

option startaction 'start'

option closeaction 'start'

option crypto_proposal 'aws'

option dpdaction 'start'

option if_id '302'

option local_ip '10.0.0.1'

list local_subnet '0.0.0.0/0'

list remote_subnet '0.0.0.0/0'

- Like the AWS configuration, I define the local_subnet and remote_subnet to 0.0.0.0/0. This is so I can focus on testing connectivity.

- if_id points to the XFRM interface that’s representing the tunnel in iteration.

  - The if_id must match the tunnel in iteration, as the Inside IPv4 CIDRs have been bound to an interface.

Configuring BGP on OpenWRT

In order to apply BGP routes on the AWS side, route propagation must be enabled at the route table level. Otherwise, a static route pointing to my home network (10.0.0.0/24) via the Virtual Private Gateway must be declared.

I opted for Quagga when using BGP on OpenWRT.

router bgp 65000

bgp router-id {{ ipsec_inside_cidrs[0] | ipaddr('net') | ipaddr(2) | split('/') | first }}

{% for ipsec_inside_cidr in ipsec_inside_cidrs %}

neighbor {{ ipsec_inside_cidr | ipaddr('net') | ipaddr(1) | split('/') | first }} remote-as {{ bgp_remote_as }}

neighbor {{ ipsec_inside_cidr | ipaddr('net') | ipaddr(1) | split('/') | first }} soft-reconfiguration inbound

neighbor {{ ipsec_inside_cidr | ipaddr('net') | ipaddr(1) | split('/') | first }} distribute-list localnet in

neighbor {{ ipsec_inside_cidr | ipaddr('net') | ipaddr(1) | split('/') | first }} distribute-list all out

neighbor {{ ipsec_inside_cidr | ipaddr('net') | ipaddr(1) | split('/') | first }} ebgp-multihop 2

{% endfor %}

/etc/quagga/bgpd.conf – Rendered Template

router bgp 65000

bgp router-id 169.254.211.46

neighbor 169.254.211.45 remote-as 64512

neighbor 169.254.211.45 soft-reconfiguration inbound

neighbor 169.254.211.45 distribute-list localnet in

neighbor 169.254.211.45 distribute-list all out

neighbor 169.254.211.45 ebgp-multihop 2

neighbor 169.254.181.61 remote-as 64512

neighbor 169.254.181.61 soft-reconfiguration inbound

neighbor 169.254.181.61 distribute-list localnet in

neighbor 169.254.181.61 distribute-list all out

neighbor 169.254.181.61 ebgp-multihop 2

- Like earlier, I use ipaddr('net') | ipaddr(1) to increment the IP address from the CIDR

- remote-as defines the AWS-side ASN.

  - BGP at its core defines routes based on path to AS, a layer on top of IP Addresses.

  - It’s designed to work with direct connections, not over-the-internet.

  - ISPs & exchanges will, however, use BGP at their level to forward the traffic on.

- router bgp states what the ASN of the OpenWRT router is. Because I used the default of 65000 from AWS, I place that here.

- bgp router-id is set to the first XFRM interface’s IP address, since the same BGP instance will be shared by both tunnels in the event that one tunnel goes down. AWS does not do a validation check on the router-id.
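For anyone who wants to check the template logic outside of Ansible, here is a rough Python equivalent that builds the same lines from a list of Inside IPv4 CIDRs. The function name is hypothetical, and the two CIDRs are inferred from the rendered addresses above (169.254.211.44/30 is my assumption for the first tunnel).

```python
import ipaddress


def render_bgpd(local_as: int, remote_as: int, inside_cidrs: list) -> str:
    """Build bgpd.conf lines: one router-id, then five statements per neighbour."""
    networks = [ipaddress.ip_network(cidr) for cidr in inside_cidrs]
    lines = [
        f"router bgp {local_as}",
        # The router-id is the OpenWRT-side address (index 2) of the first tunnel.
        f"bgp router-id {networks[0][2]}",
    ]
    for network in networks:
        peer = network[1]  # AWS-side address (index 1) is the BGP neighbour
        lines += [
            f"neighbor {peer} remote-as {remote_as}",
            f"neighbor {peer} soft-reconfiguration inbound",
            f"neighbor {peer} distribute-list localnet in",
            f"neighbor {peer} distribute-list all out",
            f"neighbor {peer} ebgp-multihop 2",
        ]
    return "\n".join(lines)


config = render_bgpd(65000, 64512, ["169.254.211.44/30", "169.254.181.60/30"])
print(config)
```

Indexing the network object directly (network[1], network[2]) plays the same role as the ipaddr(1) and ipaddr(2) filters in the Jinja template.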

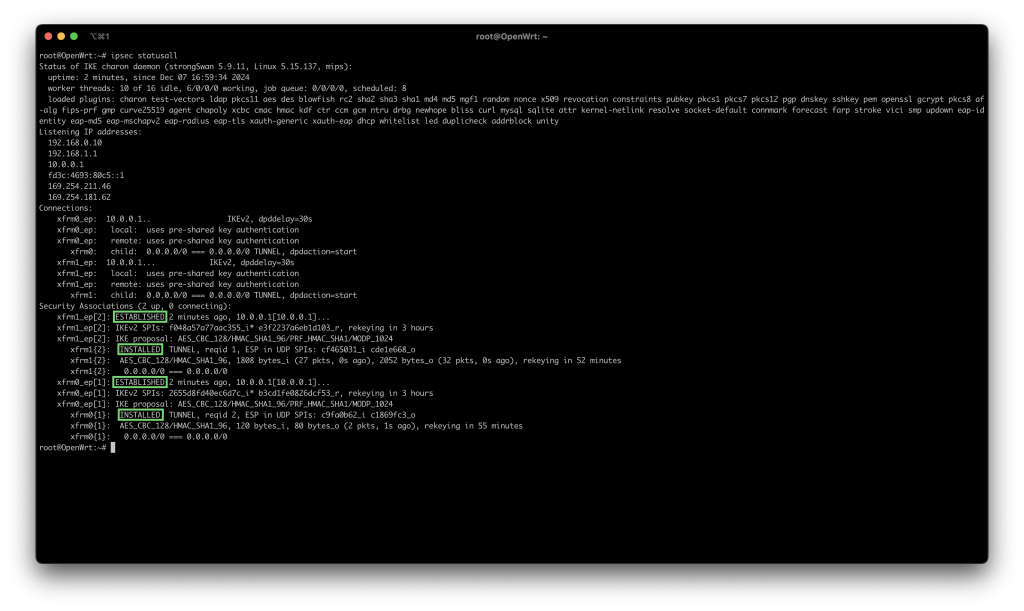

Verifying the connection to IPSec

Using the swanctl command, I can identify whether my applied configuration is successful when logged into my OpenWRT router using SSH.

Start swanctl

I don’t use the legacy ipsec init script; instead, I use the swanctl one directly. Under the hood, this will convert the UCI configuration into a strongSwan configuration located at /var/swanctl/swanctl.conf

/etc/init.d/swanctl start

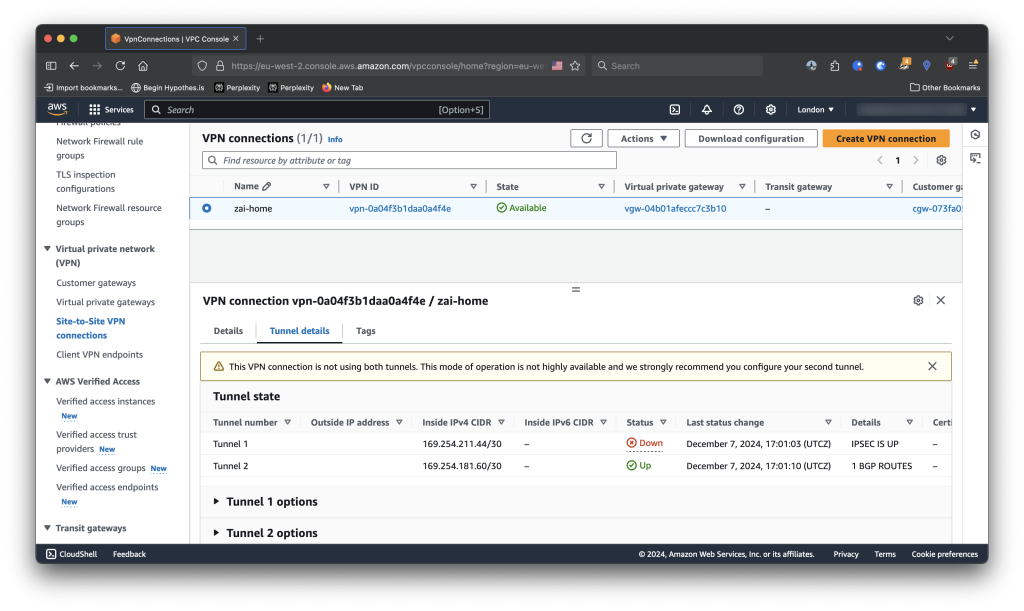

ipsec statusall

Output of the ipsec statusall command, where both VPN tunnels are ESTABLISHED and INSTALLED. Established denotes that IKE Phase 1 (encryption negotiation) was successful, and Installed denotes that IKE Phase 2 (authorization, the tunnel creation itself) was successful and is now in use.

Although AWS reports IPSEC IS DOWN, the connection was successful. The status is only Up when BGP can be reached from AWS. When using dynamic (not static) routing in the configuration for Site-to-Site, AWS doesn’t declare a connection up unless BGP is reachable at the second address available in the Inside IPv4 CIDR.

Routing traffic to & from the XFRM Interface

Finally, I need to instruct OpenWRT to allow forwarding of packets that are destined for xfrm0 or xfrm1. The Linux routing table states that 10.1.0.0/16 is reached via xfrm0 (a route applied via BGP), which is enough for the router to know that either xfrm0 or xfrm1 is the interface required.

By default, a forwarding policy of REJECT is defined. By applying the following firewall zone and forwarding rule, packets successfully go through to the AWS VPC.

Ansible Task

name: install firewall zone

uci:

command: section

key: firewall

type: zone

find_by:

name: 'tunnel'

value:

input: REJECT

output: ACCEPT

forward: REJECT

network:

- xfrm0

- xfrm1

name: install firewall forwarding

uci:

command: section

key: firewall

type: forwarding

find_by:

dest: 'tunnel'

value:

src: lan

/etc/config/firewall – UCI Configuration

config zone

option name 'tunnel'

option input 'REJECT'

option output 'ACCEPT'

option forward 'REJECT'

list network 'xfrm0'

list network 'xfrm1'

config forwarding

option src 'lan'

option dest 'tunnel'

Final tasks

The final steps of the Ansible playbook are to instruct the UCI framework to save the changes to the disk, and to reload the configuration of all services required.

name: commit changes

uci:

command: commit

name: enable required services

service:

name: "{{ item }}"

enabled: yes

state: reloaded

loop:

- swanctl

- quagga

- network

I then invoke the Ansible playbook by using a local-exec provisioner on a null_resource within Terraform, where the AWS Site-to-Site resource is a dependency. Along the lines of:

resource "null_resource" "cluster" {

provisioner "local-exec" {

command = <<EOT

ansible-playbook \
-i ${var.openwrt_address}, \
-e 'aws_tunnel_ips=${aws_vpn_connection.main.tunnel1_address},${aws_vpn_connection.main.tunnel2_address}' \
-e 'aws_psk=${aws_vpn_connection.main.tunnel1_preshared_key},${aws_vpn_connection.main.tunnel2_preshared_key}' \
playbook.yaml

EOT

}

}

This is a shortened version of what I have, but by simply feeding the outputs of the AWS Site-to-Site resource into the Ansible playbook, my router is automatically configured correctly when I create a Site-to-Site resource.
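To show what that command expands to, here is a small Python sketch that assembles the same argument list. The helper name is hypothetical and the tunnel addresses and PSKs are placeholders; the variable names aws_tunnel_ips and aws_psk are the ones passed in the provisioner above.

```python
import shlex


def ansible_command(host, tunnel_ips, psks, playbook="playbook.yaml"):
    """Build the ansible-playbook argument list used by the provisioner."""
    return [
        "ansible-playbook",
        # The trailing comma makes Ansible treat this as an inline host list
        # rather than a path to an inventory file.
        "-i", f"{host},",
        "-e", f"aws_tunnel_ips={','.join(tunnel_ips)}",
        "-e", f"aws_psk={','.join(psks)}",
        playbook,
    ]


command = ansible_command(
    "10.0.0.1",                            # LAN address of the OpenWRT router
    ["203.0.113.10", "203.0.113.20"],      # placeholder tunnel outside addresses
    ["example-psk-1", "example-psk-2"],    # placeholder pre-shared keys
)
print(shlex.join(command))
```

Building the command as a list and quoting it with shlex.join avoids surprises if a PSK ever contains characters that the shell would otherwise interpret.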

With IPSec now deployed, I can communicate directly with my resources hosted on AWS as if they were local.